Kubernetes is the de facto standard in container orchestration. It gives businesses the tools they need to self-host applications on-premises or in the cloud with the flexibility, scalability, and high availability that critical applications require. Proxmox is a highly capable hypervisor and a great platform for hosting your Kubernetes infrastructure. This post details the steps to host Kubernetes on Proxmox and the essential commands required to manage your environment.


What is Proxmox?

Before we dive into setting up a Kubernetes cluster on Proxmox, let’s briefly discuss the two technologies at the center of this guide.

Proxmox VE is an open-source virtualization platform that allows users to create and manage virtual machines (VMs) and LXC containers. Its intuitive web-based user interface, Proxmox UI, makes it easy to manage resources, monitor performance, and maintain a virtual environment.

Proxmox is a powerful virtualization platform

What is Kubernetes?

Kubernetes, on the other hand, is a powerful container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. Running Kubernetes on Proxmox offers several advantages, including better resource utilization, increased flexibility, and improved scalability.

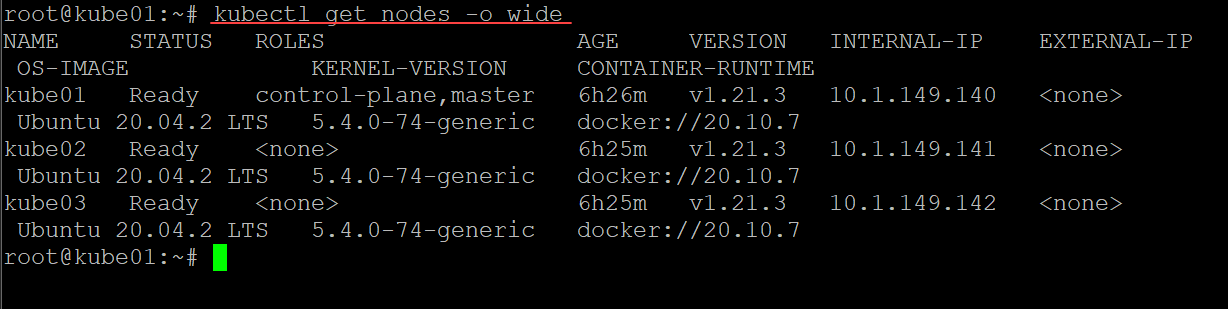

Using kubectl to get all the nodes in the K8s cluster

Prerequisites

To successfully follow this tutorial, you’ll need:

- A Proxmox host with a static IP address and at least three additional IP addresses for your Kubernetes nodes.

- Three virtual machines running Ubuntu Server.

- A local machine or cloud provider to run kubectl commands.

Setting Up the Proxmox Environment

- Create virtual machines

First, you’ll need to create three new VMs for your Kubernetes cluster on the Proxmox host: one will serve as the master (control-plane) node and the other two as worker nodes. Configure each VM with a static IP address and give it enough resources for your workloads; kubeadm requires at least 2 GB of RAM per machine and at least 2 CPUs on the control-plane node.
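If you prefer the command line to the Proxmox web UI, the VMs can also be created with Proxmox’s qm tool on the host. The sketch below is one possible approach, assuming a recent Proxmox VE release; the VM IDs (201 to 203), names, memory and disk sizes, the local-lvm storage, the vmbr0 bridge, the ISO file name, and the helper functions are all hypothetical placeholders. Setting DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: create the three Kubernetes VMs with Proxmox's qm CLI (run as root on the host).
# VM IDs, names, sizes, storage, bridge, and ISO name are hypothetical placeholders.
# Set DRY_RUN=1 to print each command instead of executing it.

ISO="local:iso/ubuntu-server.iso"   # placeholder: an Ubuntu Server ISO uploaded to "local"

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create one VM: vmid, name, memory in MiB, CPU cores.
create_k8s_vm() {
  local vmid="$1" name="$2" memory_mib="$3" cores="$4"
  run qm create "$vmid" \
    --name "$name" \
    --memory "$memory_mib" \
    --cores "$cores" \
    --ostype l26 \
    --scsihw virtio-scsi-pci \
    --scsi0 local-lvm:32 \
    --net0 virtio,bridge=vmbr0 \
    --cdrom "$ISO" \
    --boot "order=scsi0;ide2"
}

# kubeadm expects at least 2 CPUs and 2 GB of RAM on the control-plane node.
create_k8s_vms() {
  create_k8s_vm 201 k8s-master  4096 2
  create_k8s_vm 202 k8s-worker1 4096 2
  create_k8s_vm 203 k8s-worker2 4096 2
}
```

For example, run DRY_RUN=1 create_k8s_vms to review the commands, then create_k8s_vms to create the VMs, and start each one with qm start VMID to run the Ubuntu installer.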

- Install Ubuntu Server

Once the VMs are created, install Ubuntu Server on all three of them. Follow the standard installation process, making sure to configure each VM with a unique hostname and its previously assigned static IP address.

- Update and reboot

After installing Ubuntu Server, update the packages on each VM and then reboot it. This ensures that your VMs are running the latest software and are ready for the next steps:

sudo apt-get update && sudo apt-get upgrade -y
sudo reboot

- Configure SSH

To manage your Kubernetes cluster, you’ll need SSH access to each node. Configure the SSH server on each Ubuntu VM and make sure that you can open an SSH session from your local machine or cloud provider.

Installing Kubernetes on the Proxmox Cluster

- Install Docker

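Kubernetes needs a container runtime on every node, including the master, to run its workloads. On each node, install Docker by following the official Docker installation guide for Ubuntu; this also installs containerd (the containerd.io package). Since Kubernetes 1.24 removed the dockershim, the kubelet no longer talks to Docker Engine directly: it uses containerd through containerd’s CRI plugin, or Docker Engine only through the separate cri-dockerd adapter.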
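After Docker’s packages are installed, containerd usually needs two adjustments before kubeadm can use it: the containerd.io package ships a config file that disables the CRI plugin, and kubeadm 1.22 and later configure the kubelet for the systemd cgroup driver, so runc should use it as well. The sketch below also loads the kernel modules and sysctls commonly listed as container-runtime prerequisites. It assumes containerd 1.x’s default config layout (containerd 2.x reorganized the config file), and enable_systemd_cgroup and setup_containerd are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: prepare containerd for Kubernetes on every node. Run setup_containerd as root.
# Assumes containerd came from Docker's containerd.io package and uses the
# containerd 1.x default config layout.

# Hypothetical helper: switch runc to the systemd cgroup driver in a config file.
enable_systemd_cgroup() {
  local config="$1"
  sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$config"
}

setup_containerd() {
  # Load the overlay and br_netfilter modules now and on every boot.
  printf 'overlay\nbr_netfilter\n' > /etc/modules-load.d/k8s.conf
  modprobe overlay
  modprobe br_netfilter

  # Let bridged traffic pass through iptables and enable IPv4 forwarding.
  printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables  = 1' \
    'net.bridge.bridge-nf-call-ip6tables = 1' \
    'net.ipv4.ip_forward                 = 1' > /etc/sysctl.d/k8s.conf
  sysctl --system

  # Replace the packaged config (which disables the CRI plugin) with the defaults.
  mkdir -p /etc/containerd
  containerd config default > /etc/containerd/config.toml
  enable_systemd_cgroup /etc/containerd/config.toml
  systemctl restart containerd
}
```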

- Disable swap

The kubelet refuses to start by default while swap is enabled, so turn swap off on every node. The first command below disables swap immediately; the second opens the filesystem table, /etc/fstab, for editing:

sudo swapoff -a
sudo nano /etc/fstab

In the fstab file, comment out the line that refers to the swap file (on Ubuntu Server this is typically /swap.img) so that swap stays off after a reboot. Then run swapon --show to verify the change; it prints nothing when no swap is active.
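The manual fstab edit can also be scripted. In the sketch below, comment_out_swap is a hypothetical helper that prefixes "# " to every active fstab line containing a standalone swap field (normally the filesystem type), and disable_swap combines it with swapoff -a and a backup of the original file. Run disable_swap as root on every node.

```shell
#!/usr/bin/env bash
# Sketch: disable swap now and keep it disabled after reboots.
# comment_out_swap is a hypothetical helper; run disable_swap as root.

# Prefix "# " to every active fstab line that has a standalone "swap" field
# (normally the filesystem type). Lines already commented out are left alone.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -i -E '/^[^#]*[[:space:]]swap([[:space:]]|$)/ s/^/# /' "$fstab"
}

disable_swap() {
  swapoff -a                     # turn swap off for the running system
  cp /etc/fstab /etc/fstab.bak   # keep a backup before editing
  comment_out_swap /etc/fstab
  swapon --show                  # prints nothing once no swap is active
}
```

Because already-commented lines start with "#", running comment_out_swap twice leaves the file unchanged the second time.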

- Install kubectl and Kubernetes components

The kubectl command is the command-line tool used to manage Kubernetes clusters. Install kubectl on your local machine or the cloud provider you’re using for cluster management. Follow the official installation instructions for your operating system to ensure you have the correct version of kubectl.

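On the Ubuntu Server nodes themselves, install kubeadm, the kubelet, and kubectl, plus the CNI plugins package. These packages come from the Kubernetes project’s own apt repository (pkgs.k8s.io) rather than Ubuntu’s default repositories, so that repository must be added first. Holding the packages afterwards keeps routine upgrades from changing their versions unexpectedly:

sudo apt update
sudo apt install -y kubeadm kubelet kubectl kubernetes-cni
sudo apt-mark hold kubeadm kubelet kubectl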
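Because kubeadm, kubelet, and kubectl are published on pkgs.k8s.io rather than in Ubuntu’s archive, the repository setup can be sketched as follows, based on the pkgs.k8s.io instructions in the Kubernetes documentation. The v1.30 minor version is an assumption (each repository serves a single minor release, so pick the one you want), and k8s_apt_source and install_kube_packages are hypothetical helper names. Run install_kube_packages as root on every node.

```shell
#!/usr/bin/env bash
# Sketch: add the pkgs.k8s.io apt repository and install the Kubernetes packages.
# K8S_MINOR (v1.30) is an assumption; each repository serves one minor release.
# Run install_kube_packages as root on every node.

K8S_MINOR="${K8S_MINOR:-v1.30}"
KEYRING=/etc/apt/keyrings/kubernetes-apt-keyring.gpg

# Hypothetical helper: print the apt source line for a Kubernetes minor version.
k8s_apt_source() {
  printf 'deb [signed-by=%s] https://pkgs.k8s.io/core:/stable:/%s/deb/ /\n' "$KEYRING" "$1"
}

install_kube_packages() {
  apt-get update
  apt-get install -y apt-transport-https ca-certificates curl gpg
  mkdir -p -m 755 /etc/apt/keyrings
  # Import the repository signing key for the chosen minor version.
  curl -fsSL "https://pkgs.k8s.io/core:/stable:/${K8S_MINOR}/deb/Release.key" \
    | gpg --batch --yes --dearmor -o "$KEYRING"
  k8s_apt_source "$K8S_MINOR" > /etc/apt/sources.list.d/kubernetes.list
  apt-get update
  apt-get install -y kubelet kubeadm kubectl
  # Stop routine apt upgrades from changing the cluster component versions.
  apt-mark hold kubelet kubeadm kubectl
}
```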

Setting Up the Kubernetes Cluster

Now that we have the needed components installed for spinning up a new Kubernetes cluster, let’s look at that process and the steps involved.

Initialize the master node

Choose one of the three VMs to act as the master node in your Kubernetes cluster. On the master node, run the following command to initialize the cluster:

sudo kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=MASTER_NODE_STATIC_IP

Replace MASTER_NODE_STATIC_IP with the static IP address of the master node. The --pod-network-cidr value of 10.244.0.0/16 matches the default network of Flannel, the pod network used later in this guide. This command sets up the control plane and prints a join command that you’ll use to connect the worker nodes to the cluster.

Be sure to copy the join command into a text file. If you don’t, you can still generate a new one later, as we will see below.
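The same settings can be kept in a kubeadm configuration file instead of flags, which is easier to version and review. In this sketch, write_kubeadm_config is a hypothetical helper, 192.0.2.10 stands in for MASTER_NODE_STATIC_IP, and the kubeadm.k8s.io/v1beta3 API version is an assumption that matches kubeadm 1.22 and later (newer releases add v1beta4, and kubeadm config migrate converts between them).

```shell
#!/usr/bin/env bash
# Sketch: write a kubeadm config equivalent to the init flags.
# write_kubeadm_config, the default file name, and 192.0.2.10 are placeholders.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}" ip="${2:-192.0.2.10}"
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: "${ip}"
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
networking:
  podSubnet: "10.244.0.0/16"
EOF
}
```

For example, write_kubeadm_config kubeadm-config.yaml 192.0.2.10 followed by sudo kubeadm init --config kubeadm-config.yaml would take the place of the flags shown above.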

Configure kubectl on the master node

After initializing the master node, configure kubectl to use the newly created cluster. To do this, run the following commands on the master node, which copies the kubeconfig into the home directory of your user account:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Set up the pod network

Kubernetes requires a pod network add-on (a CNI plugin) so that pods can communicate across nodes; until one is installed, the nodes report a NotReady status. In this tutorial, we’ll use Flannel as the network provider. To install Flannel, run the following command on the master node:

kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

Join the worker nodes to the cluster

Now it’s time to join the worker nodes to the cluster using the join command generated during the master node initialization. The join command will look something like this:

sudo kubeadm join --token YOUR_TOKEN YOUR_MASTER_NODE_STATIC_IP:6443 --discovery-token-ca-cert-hash sha256:YOUR_HASH

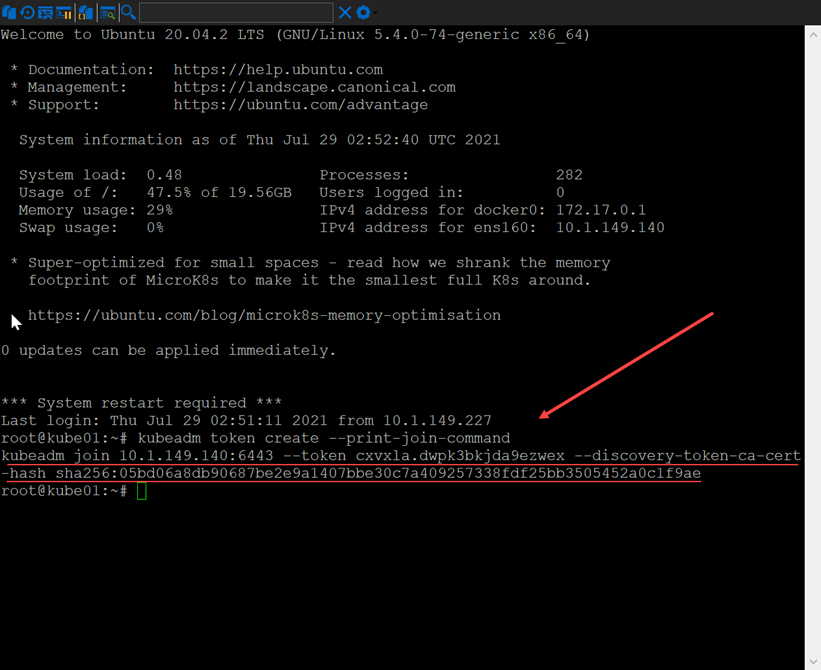

Printing the join command for joining worker nodes to the cluster

Replace YOUR_TOKEN with the token provided by the kubeadm init output, YOUR_MASTER_NODE_STATIC_IP with the static IP address of the master node, and YOUR_HASH with the discovery token CA cert hash from the output.
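If you have the token but not the hash, the hash can be recomputed from the cluster’s CA certificate. The pipeline below is the openssl one-liner from the kubeadm join documentation, wrapped in a hypothetical ca_cert_hash function; it assumes the default RSA CA key and the default certificate path /etc/kubernetes/pki/ca.crt on the master node.

```shell
#!/usr/bin/env bash
# Sketch: recompute the value for --discovery-token-ca-cert-hash.
# Wraps the openssl pipeline from the kubeadm join docs; assumes an RSA CA key.
# ca_cert_hash is a hypothetical helper name.

ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the CA public key, convert it to DER, and hash it with SHA-256.
  openssl x509 -pubkey -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master node, echo "sha256:$(ca_cert_hash)" prints the value to pass as YOUR_HASH.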

Creating and Retrieving the join token

To retrieve the join command if you didn’t save it during the initialization, you can run the following command on the master node:

sudo kubeadm token create --print-join-command

This will generate a new token and print the join command with the updated token and hash.

Run the join command on each worker node, making sure to replace the necessary tokens and IP addresses as required. This will connect the worker nodes to the master node and form the complete Kubernetes cluster.

Note: Bootstrap tokens expire after 24 hours by default. If your token has expired, you can create a new one on the master node using:

sudo kubeadm token create

Then update the join command accordingly.

Verify the cluster setup

Once all worker nodes have been joined to the cluster, you can verify the cluster nodes setup by running the following command on the master node:

kubectl get nodes

This command will display the status of all the nodes in the cluster. Ensure that all nodes have a “Ready” status before proceeding.
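To script this check, the STATUS column can be filtered directly. The sketch below uses a hypothetical not_ready_nodes filter over kubectl get nodes --no-headers output (columns NAME, STATUS, ROLES, AGE, VERSION); note that it also reports cordoned nodes, whose status reads Ready,SchedulingDisabled.

```shell
#!/usr/bin/env bash
# Sketch: list nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin.
# Exit status: 0 if every node is Ready (or there is no input), 1 otherwise.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad ? 1 : 0 }'
}
```

For example, kubectl get nodes --no-headers | not_ready_nodes prints the names of nodes that are not Ready yet. Alternatively, kubectl wait --for=condition=Ready nodes --all --timeout=300s blocks until every node is Ready or the timeout expires.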

For further details about each node, such as its internal IP address, OS image, kernel version, and container runtime, use the wide output format:

kubectl get nodes -o wide

Managing Your Kubernetes Cluster on Proxmox

- Accessing the Proxmox UI

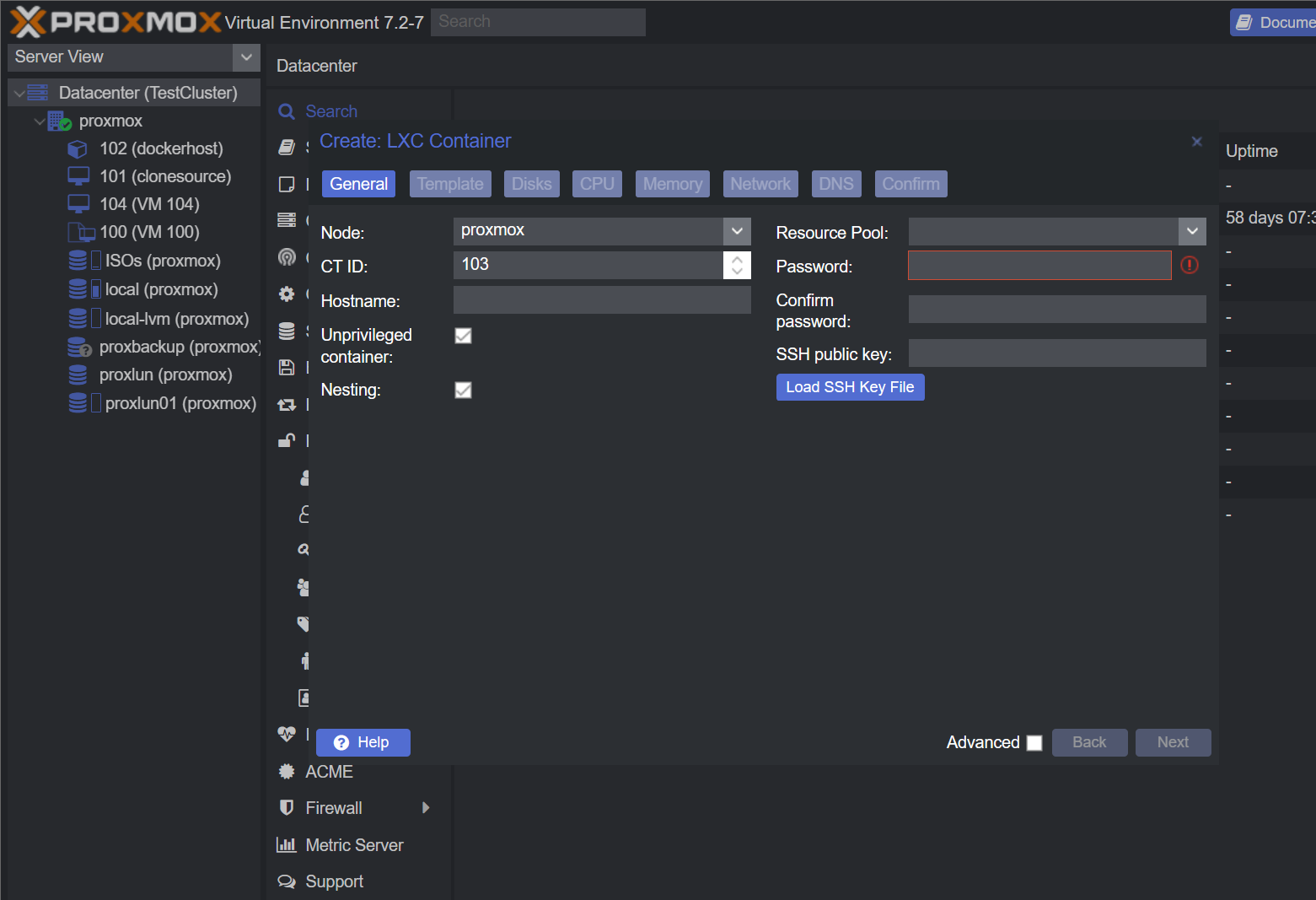

While you manage workloads with kubectl, you can use the Proxmox UI to monitor the performance and resource usage of the VMs that make up your cluster. Access the Proxmox UI by browsing to https://PROXMOX_HOST_IP:8006, replacing PROXMOX_HOST_IP with the static IP address of your Proxmox host.

- Creating and managing LXC containers

In addition to running Kubernetes on Proxmox VMs, you can also create and manage LXC containers directly through the Proxmox UI. LXC containers offer a lightweight alternative to VMs for running containerized applications.

Creating an LXC container in Proxmox

- Scaling your cluster

As your workloads grow, you may need to add additional nodes to your Kubernetes cluster. To do this, simply create new VMs on the Proxmox host, install the necessary components, and join them to the cluster using the join command.

Troubleshooting Kubernetes on Proxmox: Common Issues and Solutions

Encountering issues while setting up or managing a Kubernetes cluster on Proxmox is not uncommon. In this section, we’ll cover some common problems and their solutions to help you troubleshoot your Kubernetes on Proxmox deployment.

Kubernetes nodes not joining the cluster

If your worker nodes are unable to join the cluster after running the join command, make sure to check the following:

- Ensure that you’re using the correct join command with the right token and IP address.

- Verify that the token has not expired. If it has, create a new token on the master node using sudo kubeadm token create and update the join command accordingly.

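- Check the firewall settings on both the master and worker nodes to ensure they allow the necessary traffic for Kubernetes communication; at a minimum, the workers must be able to reach the API server on TCP port 6443 of the master node.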
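On Ubuntu nodes that use ufw, the relevant ports can be opened as in the sketch below. The TCP ports are the core ones from the kubeadm ports reference (API server 6443, etcd 2379 to 2380, kubelet 10250, kube-controller-manager 10257, kube-scheduler 10259, and the NodePort range on workers), UDP 8472 is Flannel’s default VXLAN port, and open_k8s_ports is a hypothetical helper. Consider restricting the rules to your cluster subnet if the nodes are reachable from untrusted networks.

```shell
#!/usr/bin/env bash
# Sketch: open Kubernetes and Flannel ports with ufw. Run as root, or set
# DRY_RUN=1 to print the ufw commands instead of running them.
# open_k8s_ports is a hypothetical helper; the port lists cover the core ports only.

CONTROL_PLANE_PORTS="6443/tcp 2379:2380/tcp 10250/tcp 10257/tcp 10259/tcp 8472/udp"
WORKER_PORTS="10250/tcp 30000:32767/tcp 8472/udp"

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

open_k8s_ports() {
  local role="$1" ports p
  case "$role" in
    control-plane) ports="$CONTROL_PLANE_PORTS" ;;
    worker)        ports="$WORKER_PORTS" ;;
    *) echo "usage: open_k8s_ports control-plane|worker" >&2; return 2 ;;
  esac
  # Word splitting on $ports is intentional: one ufw rule per entry.
  for p in $ports; do
    run ufw allow "$p"
  done
}
```

Run open_k8s_ports control-plane on the master and open_k8s_ports worker on each worker. Note that ufw allow only adds rules; it does not enable the firewall by itself.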

Pods stuck in the “Pending” state

If your pods are stuck in the “Pending” state, the Events section of kubectl describe pod POD_NAME usually shows why. Common reasons include:

- Insufficient resources: Ensure that your worker nodes have enough CPU, memory, and storage resources to accommodate the pod’s requirements.

- Pod network issues: Verify that the pod network has been correctly set up and that the network plugin is functioning properly.

- Persistent volume claims: If your pod relies on a persistent volume, ensure that the storage class is properly configured and that there’s available storage to satisfy the claim.

Master node not responding or unreachable

If your master node becomes unresponsive or unreachable, consider the following steps:

- Check the resource usage and performance of the master node’s virtual machine in the Proxmox UI. High resource usage or insufficient resources could be causing the issue.

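- Verify that the control plane components (API server, etcd, controller manager, and scheduler) are running without issues. While the API server responds, you can read their logs with kubectl logs -n kube-system POD_NAME; if it doesn’t, check the kubelet with sudo journalctl -u kubelet and the control-plane containers with sudo crictl ps -a and sudo crictl logs CONTAINER_ID.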

- Ensure that the master node’s static IP address and network configuration are correct and that there are no issues with the underlying Proxmox host or network infrastructure.

Proxmox UI not accessible or slow

If you’re experiencing issues with the Proxmox UI, such as slow loading times or unresponsiveness, consider these solutions:

- Verify that your Proxmox host has adequate resources (CPU, RAM, and storage) to handle the workloads running on the virtual machines and containers.

- Check the network connectivity between your local machine and the Proxmox host.

- Ensure that your Proxmox host is running the latest version of Proxmox VE and that all packages are up to date.

Inability to connect to Kubernetes services or applications

If you’re unable to connect to your Kubernetes services or applications, check the following:

- Verify that the Kubernetes services have been correctly configured, and their corresponding pods are running without issues.

- Ensure that the ingress controller and any load balancers are correctly set up and functioning properly.

- Check the firewall settings on both the worker nodes and the Proxmox host to ensure they allow the necessary traffic for your services and applications.

By following these troubleshooting tips, you can quickly identify and resolve common issues that may arise when running a Kubernetes cluster on Proxmox.

FAQs: Common Questions about Kubernetes on Proxmox

Below are answers to some common questions about running Kubernetes on Proxmox.

Can I run Kubernetes on Proxmox LXC containers instead of virtual machines?

While it’s technically possible to run Kubernetes on Proxmox LXC containers, it’s generally not recommended due to potential compatibility issues and the differences in resource isolation between LXC containers and virtual machines. Using virtual machines for your Kubernetes nodes ensures a more stable and secure environment.

How can I set up persistent storage for my Kubernetes cluster on Proxmox?

To set up persistent storage for your Kubernetes cluster on Proxmox, you can use various storage solutions such as local storage, network-attached storage (NAS), or distributed storage systems like Ceph. You’ll need to configure the appropriate storage classes in your Kubernetes cluster to utilize these storage solutions.
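As one example of the local-storage option, Kubernetes supports statically provisioned local persistent volumes. The sketch below writes a StorageClass with no dynamic provisioner plus one PersistentVolume backed by a directory on a worker; the names, the 20Gi size, the /mnt/disks/data path, the k8s-worker1 node name, and the write_local_storage_manifest helper are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Sketch: write a manifest for statically provisioned local storage.
# All names, sizes, paths, and the node name below are hypothetical placeholders.
write_local_storage_manifest() {
  local out="${1:-local-storage.yaml}"
  cat > "$out" <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner   # no dynamic provisioning
volumeBindingMode: WaitForFirstConsumer     # bind when a pod is scheduled
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: worker1-data
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/disks/data            # must already exist on the node
  nodeAffinity:                      # local volumes must be pinned to a node
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - k8s-worker1
EOF
}
```

After kubectl apply -f local-storage.yaml, claims that request storageClassName: local-storage are bound once a pod using them is scheduled. Networked options such as NFS or Ceph need their own provisioners instead.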

Is it possible to run a multi-master Kubernetes cluster on Proxmox?

Yes, you can run a multi-master Kubernetes cluster on Proxmox by setting up a highly available control plane. This involves creating additional master nodes and configuring them with a load balancer to distribute the API server traffic. The process is more complex than setting up a single-master cluster and requires additional resources.
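With kubeadm, the key setting for such a cluster is controlPlaneEndpoint, which points every node at the load balancer rather than at a single master. In this sketch, k8s-api.example.lan:6443 is a placeholder load-balancer address, write_ha_kubeadm_config is a hypothetical helper, and the v1beta3 config API is assumed as in a kubeadm 1.22 or later setup.

```shell
#!/usr/bin/env bash
# Sketch: kubeadm config for a highly available control plane behind a load balancer.
# The endpoint name is a hypothetical placeholder; the load balancer must forward
# TCP 6443 to every control-plane node.
write_ha_kubeadm_config() {
  local out="${1:-kubeadm-ha.yaml}" endpoint="${2:-k8s-api.example.lan:6443}"
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
controlPlaneEndpoint: "${endpoint}"
networking:
  podSubnet: "10.244.0.0/16"
EOF
}
```

The first control-plane node would then be initialized with sudo kubeadm init --config kubeadm-ha.yaml --upload-certs, and the additional control-plane nodes join with the control-plane variant of the join command that it prints, which adds --control-plane and --certificate-key.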

Can I use other container runtimes besides Docker for my Kubernetes cluster on Proxmox?

Yes. Kubernetes works with any container runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O; since Kubernetes 1.24, Docker Engine is supported only through the cri-dockerd adapter. Install and configure your preferred runtime on every node before initializing the Kubernetes cluster.

How do I monitor my Kubernetes cluster running on Proxmox?

You can monitor your Kubernetes cluster using a combination of the Proxmox UI for virtual machine performance and resource usage, and Kubernetes-native monitoring tools such as Prometheus and Grafana for in-depth insights into your cluster’s performance and health.

Can I use Kubernetes network plugins like Calico or Weave with my Proxmox cluster?

Yes, you can use any Kubernetes-compatible network plugin with your Proxmox cluster. To use a different network plugin instead of Flannel, follow the installation instructions provided by the network plugin’s documentation, and make sure to configure the pod network CIDR accordingly during the cluster initialization process.

Are there any limitations to running Kubernetes on Proxmox compared to cloud providers like AWS, GCP, or Azure?

Running Kubernetes on Proxmox may have some limitations compared to cloud providers, such as reduced support for managed services and a potentially smaller scale. However, it offers the benefits of full control over your infrastructure, better resource utilization, and cost savings, especially for smaller deployments or testing environments.

Wrapping up

Using this guide, admins can successfully configure a new Kubernetes cluster running on top of Proxmox. Proxmox offers great capabilities from a virtualization standpoint. Kubernetes is the de facto standard in container orchestration and is widely used both for self-hosting business-critical containerized applications and in cloud environments. Using both together offers the best of both worlds for your container workloads, allowing them to be flexible, available, and scalable.

2 comments

Thank you for the article. Can you explain in more detail why you disable swap completely?

Jonas,

Great question. It comes down to the Kubelet working correctly and is a documented step that is required when running Kubernetes. They mention possibly supporting it in the future. You can read the thread here: https://discuss.kubernetes.io/t/swap-off-why-is-it-necessary/6879/6

Brandon